Synopsis

DNA forms genes, which encode proteins, which together build modular, hierarchically organized multicellular organisms. The more ethically and efficiently we leverage advanced AI algorithms to probe and predict these key players in the game of life, the better we can improve current species and design new ones that are built to thrive, now and into the future.

Inside This Article:

- What are the multiple layers of developmental biology?

- Why are proteins so important?

- How is AI leveraged to 1) predict protein function, 2) design new proteins, and 3) better understand and guide biodevelopment?

- How are such methods leading to key biological insights?

- What are some key considerations in so doing?

Palimpsestic Proteins

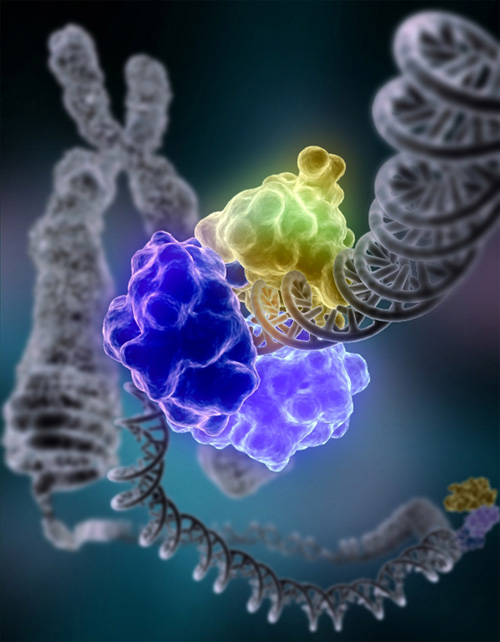

The central dogma of molecular biology, which describes the directional flow of information in the blueprint of life, dates back to 1957 and the work of Francis Crick: DNA in the form of genes is transcribed into RNA, which is translated into proteins (from the Greek prōtos, “first”) (1), the building blocks of complex, multicellular organisms.

Amazing AI

Born in the wake of cybernetics and the pioneering computational work of John von Neumann and Alan Turing, artificial intelligence (AI), as first formally defined in 1956, reflects “the construction of computer programs that engage in tasks that […] require high-level mental processes, such as perceptual learning, memory organization and critical reasoning”. Within AI, machine learning (ML) systems, in contrast to simple rule-based systems (think online chatbots), dynamically learn from data to predict outcomes, and have emerged as particularly well positioned to organize, study, and interpret the biologically valuable and medically tractable information ensconced within our ever-growing, extraordinarily complex vaults of protein data (2).

DIY Proteins

First, how can we predict how a given protein will fold – and therefore function?

PROTEIN FOLDING PREDICTION

The workhorses of biological organisms, proteins begin as linear chains of DNA-encoded amino acids that fold into elaborate 3D shapes, which guide how they interact with other molecules and exert their biological functions. Researchers estimate that between 10,000 and several billion different types of proteins exist across life forms (3,4), and that for any one protein the number of theoretically possible configurations could reach, on average, up to 10^300. Real proteins nonetheless fold within seconds or less, a puzzle formulated in 1969 by American molecular biologist Cyrus Levinthal and known as the Levinthal paradox. Complicated to parse, this hidden code has remained an unsolved challenge for decades. How can we leverage new ML tools to predict protein folding patterns? Can this shed light on how form dictates function, in both health and disease?
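To get a feel for the scale behind the Levinthal paradox, the back-of-the-envelope sketch below estimates how long an exhaustive search through roughly 10^300 conformations would take. The sampling rate of 10^13 conformations per second and the function name `log10_search_years` are illustrative assumptions, not values taken from the sources cited here.

```python
import math

# Illustrative assumption: one protein samples 1e13 conformations per second.
ASSUMED_LOG10_SAMPLES_PER_SECOND = 13.0
SECONDS_PER_YEAR = 365.25 * 24 * 3600
LOG10_AGE_OF_UNIVERSE_YEARS = math.log10(1.38e10)  # roughly 13.8 billion years


def log10_search_years(log10_conformations: float,
                       log10_rate: float = ASSUMED_LOG10_SAMPLES_PER_SECOND) -> float:
    """Return log10 of the years needed to visit every conformation once.

    Working in log10 space keeps the astronomically large numbers manageable.
    """
    log10_seconds = log10_conformations - log10_rate
    return log10_seconds - math.log10(SECONDS_PER_YEAR)


log10_years = log10_search_years(300.0)
print(f"Exhaustive search: roughly 10^{log10_years:.1f} years")
print(f"Age of the universe: roughly 10^{LOG10_AGE_OF_UNIVERSE_YEARS:.1f} years")
```

Under these assumptions, an exhaustive search would outlast the age of the universe by hundreds of orders of magnitude, which is why folding cannot proceed by random trial alone.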

“It’s such a complicated problem with so many parameters, so many ways to go wrong,” warns structural biologist Andriy Kryshtafovych. After decades of trial, however, the 2020 Critical Assessment of protein Structure Prediction (CASP14) competition yielded unprecedented success: DeepMind’s AlphaFold2 leveraged troves of protein structure data to predict protein folding patterns with a median Global Distance Test (GDT) score of 92.4 out of 100 and an average error of approximately 1.6 Ångströms (i.e. 0.16 nanometers of error in spatial predictions).
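An error expressed in Ångströms is commonly reported as a root-mean-square deviation (RMSD) between predicted and experimentally determined atom positions. The sketch below is a minimal illustration, assuming the two structures have already been superimposed (real evaluations first align them optimally, and CASP's GDT score is a separate metric). The coordinates and the function name `rmsd` are invented for the example.

```python
import math

Coordinate = tuple[float, float, float]


def rmsd(predicted: list[Coordinate], reference: list[Coordinate]) -> float:
    """Root-mean-square deviation between two pre-aligned sets of atom positions."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("both structures need the same, non-zero number of atoms")
    squared_error = sum(math.dist(p, r) ** 2 for p, r in zip(predicted, reference))
    return math.sqrt(squared_error / len(predicted))


# Invented toy coordinates in Ångströms (three atoms along a line).
reference_atoms = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
# Every predicted atom is displaced by 1.6 Å along the y axis.
predicted_atoms = [(x, y + 1.6, z) for x, y, z in reference_atoms]

error_angstroms = rmsd(predicted_atoms, reference_atoms)
print(f"RMSD: {error_angstroms:.2f} Å = {error_angstroms / 10:.2f} nm")
```

For scale, 1.6 Å is comparable to the length of a single covalent bond between two carbon atoms.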

AlphaFold2 used novel neural network architectures and training procedures explicitly incorporating the evolutionary, physical and geometric constraints of protein structure. Its architecture harnessed multiple sequence alignments to identify co-evolving amino acids likely to physically associate with each other, while using a pattern-recognition tool to pinpoint local patterns likely to underpin a protein’s final folded form (5,6). These strategies were supported, in the background, by a neural-network architecture allowing for fluid information exchange between software components, yielding a game-changing result.
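The idea that co-evolving positions in an alignment hint at physical contact can be illustrated with a classical covariation statistic, mutual information between alignment columns. This is a deliberately simplified stand-in and not AlphaFold2's learned approach. The toy alignment and the function names are invented for the example.

```python
import math
from collections import Counter
from itertools import combinations


def column_mutual_information(msa: list[str], i: int, j: int) -> float:
    """Mutual information (in bits) between alignment columns i and j."""
    n = len(msa)
    count_i = Counter(seq[i] for seq in msa)
    count_j = Counter(seq[j] for seq in msa)
    count_ij = Counter((seq[i], seq[j]) for seq in msa)
    mi = 0.0
    for (a, b), joint_count in count_ij.items():
        p_ab = joint_count / n
        p_a, p_b = count_i[a] / n, count_j[b] / n
        mi += p_ab * math.log2(p_ab / (p_a * p_b))
    return mi


def rank_coevolving_pairs(msa: list[str]) -> list[tuple[tuple[int, int], float]]:
    """Score every pair of columns and sort from most to least covarying."""
    length = len(msa[0])
    scores = {
        (i, j): column_mutual_information(msa, i, j)
        for i, j in combinations(range(length), 2)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Invented toy alignment: columns 1 and 4 swap K/E together in every sequence.
toy_msa = ["AKLDE", "AKLDE", "AELDK", "AELDK", "AKIDE", "AEIDK"]

ranked_pairs = rank_coevolving_pairs(toy_msa)
top_pair, top_score = ranked_pairs[0]
print(f"Most strongly covarying columns: {top_pair} ({top_score:.2f} bits)")
```

In real alignments, raw mutual information is confounded by shared ancestry and indirect couplings, which is part of why learned models have displaced simple statistics of this kind.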

AlphaFold2’s success thus reduces reliance on traditional experimental structure determination methods such as nuclear magnetic resonance (NMR) spectroscopy, X-ray crystallography, or cryo-electron microscopy, even for proteins for which no similar structure is known (7,8). To date, folding patterns have been predicted for nearly 99% of human proteins currently databased, helping inform the design of new enzymes, antibodies, and other therapeutics. For example, the program was used to predict the potential folding patterns of wolframin, mutations in which result in the rare neurodegenerative disorder Wolfram Syndrome (6,9), which can now be investigated in the lab. While the Levinthal paradox itself remains unsolved, the impact of ML on the study of protein folding and function will continue to be “transformative”.

What will the future of this space hold? Predictions made from primary structure (the amino acid sequence) will inform representations of higher-order protein structure, including secondary, tertiary, and quaternary structure, all of which play critical roles in protein function. In addition, this work is likely to shed light on the function of the still-unresolved dark proteome (10).

Second, how can we design novel proteins with specific properties to perform desired functions?

PROTEIN DESIGN AND SYNTHESIS

When designing a new protein from the ground up, the options are gargantuan: the combinatorial size of the sequence space is such that a chain of n amino acids (of which 20 commonly occur in nature) yields 20^n possible sequences! “The design space is just enormous,” explains computational biologist Arvind Ramanathan of Illinois’ Argonne National Laboratory. Can we leverage our increasing familiarity with the language of proteins to design new ones with optimized or novel functions?
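As a quick worked example of how fast 20^n grows, the sketch below counts the possible sequences for a few chain lengths. The lengths chosen and the function name `sequence_space_size` are illustrative assumptions.

```python
AMINO_ACID_ALPHABET_SIZE = 20  # the standard amino acids


def sequence_space_size(length: int) -> int:
    """Number of distinct amino acid sequences of the given length (20^n)."""
    if length < 0:
        raise ValueError("length must be non-negative")
    return AMINO_ACID_ALPHABET_SIZE ** length


for n in (10, 50, 100):
    size = sequence_space_size(n)
    # The digit count minus one gives the order of magnitude of an exact integer.
    print(f"n = {n:>3}: about 10^{len(str(size)) - 1} possible sequences")
```

Even a short chain of 100 residues, well below the size of many natural proteins, already spans about 10^130 candidate sequences.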

In short: Yes. Such is the precise goal of computational protein design (11). Historically, most strategies sought to address the inverse folding problem (12), first identifying a desired protein structure with certain key properties, and then designing an amino acid sequence that will naturally fold into it. But today, modular ML in particular is a far more powerful tool for identifying patterns linking protein sequence, structure, and function and applying them to protein design.

How so? First, the data representation and processing architecture needs to be well suited to the target problem (12); in the case of protein data for example, specific representations are needed for both the amino acid sequence and the protein structure in order to capture both 1D and 3D information. Second, physiological constraints need to be integrated in a balanced way; for example, the tight binding of a protein may benefit from being perfectly complementary in shape to its target, but poorly placed charges could prevent it from binding in the first place. Third, and relatedly, the training of the model should be as efficient as possible – for example, by first teaching the program that a protein’s relative orientation in space, not its absolute orientation, is what is most critical to its function (13).
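The point about relative versus absolute orientation can be made concrete: the pairwise distances between atoms stay the same when a structure is rotated or shifted, so a representation built on them does not need to relearn the same shape in every pose. The sketch below checks this on invented coordinates. The helper names are hypothetical and the approach is only one simple way of obtaining such invariance.

```python
import math

Point = tuple[float, float, float]


def rotate_about_z(points: list[Point], angle_radians: float) -> list[Point]:
    """Rotate every point around the z axis by the given angle."""
    cos_a, sin_a = math.cos(angle_radians), math.sin(angle_radians)
    return [(cos_a * x - sin_a * y, sin_a * x + cos_a * y, z) for x, y, z in points]


def translate(points: list[Point], offset: Point) -> list[Point]:
    """Shift every point by the same offset."""
    dx, dy, dz = offset
    return [(x + dx, y + dy, z + dz) for x, y, z in points]


def pairwise_distances(points: list[Point]) -> list[list[float]]:
    """Matrix of distances between every pair of points."""
    return [[math.dist(p, q) for q in points] for p in points]


# Invented toy structure (coordinates in Ångströms).
toy_structure = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (5.0, 3.6, 0.0), (5.0, 3.6, 3.8)]
moved_structure = translate(
    rotate_about_z(toy_structure, math.radians(70)), (10.0, -4.0, 2.5)
)

original = pairwise_distances(toy_structure)
moved = pairwise_distances(moved_structure)
same_shape = all(
    math.isclose(a, b, abs_tol=1e-9)
    for row_a, row_b in zip(original, moved)
    for a, b in zip(row_a, row_b)
)
print("Absolute coordinates changed:", moved_structure != toy_structure)
print("Pairwise distances preserved:", same_shape)
```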

What cutting-edge methods are currently showing promise? Recently, and intuitively given the analogous evolutionary origins of language and proteins, language models based on transformer networks trained for natural language processing (NLP) have been fruitful for protein modeling (14). Training the novel conditional language model ProGen on 280 million protein sequences led it to learn rules on how to generate sequences of amino acids in a structurally viable manner, reminiscent of how AI language models trained on human languages capture word usage patterns and grammar rules. Subsequently, a sequence of amino acids generated by ProGen was synthesized and, sure enough, performed its intended function in the real world, acting as a proof of concept that artificial proteins may be just as functional as naturally occurring proteins. In the near future, proteins with any combination of properties could be dreamed up, such as the ability to bind to another key molecule or the capacity to operate at high temperatures.
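The idea of learning which residue tends to follow which, and then sampling new sequences from those learned rules, can be sketched with a toy bigram model. This is a drastically simplified stand-in: ProGen is a large conditional transformer, not a bigram model, and the training strings below are invented rather than real proteins. All names in the code are hypothetical.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # boundary markers, not amino acids


def train_bigram_model(sequences: list[str]) -> dict[str, list[str]]:
    """Record, for every token, the tokens observed to follow it."""
    transitions = defaultdict(list)
    for sequence in sequences:
        tokens = [START, *sequence, END]
        for current, following in zip(tokens, tokens[1:]):
            transitions[current].append(following)
    return dict(transitions)


def generate_sequence(model: dict[str, list[str]], rng: random.Random,
                      max_length: int = 30) -> str:
    """Sample residues one at a time until END is drawn or max_length is hit."""
    residues = []
    current = START
    while len(residues) < max_length:
        # Repeated entries in the list make frequent transitions more likely.
        current = rng.choice(model[current])
        if current == END:
            break
        residues.append(current)
    return "".join(residues)


# Invented toy "training set" written in the one-letter amino acid alphabet.
toy_sequences = ["MKTAYIAKQR", "MKVLAAGIVG", "MSTAYLAKQL"]
toy_model = train_bigram_model(toy_sequences)
sampled = generate_sequence(toy_model, random.Random(0))
print("Sampled toy sequence:", sampled)
```

Every adjacent pair of residues in the sampled string was seen somewhere in the training set, which is the bigram analogue of respecting learned "grammar"; transformer models capture far longer-range dependencies.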

Finally, how can we realistically model the network of genes and proteins involved in building – and running – an organism?

PROTEIN NETWORK MODELING

In the end, the genome of a complex organism such as a human harbors “only” ~20,000 genes, so how do these result in the emergence of extraordinarily complex life, including the action of hundreds of thousands of interacting proteins? Such remains the mysterious g-value paradox (15). In partial answer, complex animals have developed multi-layered gene regulation programs and complex protein-protein interaction networks. As biology graduates from run-of-the-mill single-gene analyses to broad, polygenic network-based analyses, how can we harness this progression to model protein networks rather than single units? In addition, can protein sequence, structure, and function be harmoniously integrated into network models of ever-growing sophistication?

While most ML models for protein-protein interactions are sequence-based, such as by adapting language models to amino acid sequences, more advanced models are increasingly turning to structure-aware techniques, taking advantage of the contact maps of known proteins. A recent ML model ambitiously sought to incorporate all three modalities of protein sequence, structure, and function (16), creatively encoding protein structure features based on the topology of point clouds of heavy atoms, allowing it to learn 3D structural information about not only the backbones, but also the important side chains of proteins. The result? A remarkably full protein structure representation, unparalleled in the precision of its modeling.
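A contact map, as mentioned above, is a residue-by-residue grid marking which pairs sit close together in 3D. The sketch below builds one from invented coordinates. The 8 Å cutoff is a commonly used convention adopted here as an assumption, and the function name `contact_map` is hypothetical.

```python
import math

Point = tuple[float, float, float]


def contact_map(coordinates: list[Point], cutoff_angstroms: float = 8.0) -> list[list[int]]:
    """Return a symmetric 0/1 matrix; 1 marks two different residues within the cutoff."""
    size = len(coordinates)
    return [
        [
            1 if i != j and math.dist(coordinates[i], coordinates[j]) <= cutoff_angstroms else 0
            for j in range(size)
        ]
        for i in range(size)
    ]


# Invented toy coordinates (one representative atom per residue, in Ångströms).
toy_residues = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (20.0, 0.0, 0.0)]

toy_contacts = contact_map(toy_residues)
for row in toy_contacts:
    print(" ".join(str(cell) for cell in row))
```

Here the first three residues are all in contact with one another, while the fourth lies beyond the cutoff from every other residue.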

Within the world of proteins, transcription factors are critical regulators of gene expression: key levers in the modulation of hundreds to thousands of downstream genes, underpinning the identity and function of an organism’s cells. Capitalizing on the fact that DNA accessibility patterns in a cell can act as a proxy for transcription factor action, a team developed DeepTFni to infer transcription factor networks from single-cell DNA accessibility data. Not only was the model successful, but it pointed to salient biological changes in transcription factor network architectures, identifying hub transcription factors in tissue development and leukemia tumorigenesis (17). Relatedly, it is now possible to predict which proteins will be transcription factors (18), to identify transcription factor binding sites (19,20), and to derive the function of certain transcription factors (21).

How does this relate to How to Build an Organism? Predictions of cellular gene expression and protein translation profiles are actively being developed in exceptional detail across human embryonic stages (22), and the regulatory landscape of over 1,000 so-called “epigenetic profiles”, reflecting key identity-guiding genes in individual cell or tissue types, has been charted to date (23). Spanning time, space, and scale in developmental biology, these advances are paving the way towards a lucid understanding of, and ability to manipulate, the complex, protein-based, multicellular mosaic that is an organism.
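Once a regulatory network has been inferred, one simple way to flag candidate hub transcription factors is to count how many distinct targets each regulator controls. The sketch below does this on an invented edge list. It is not DeepTFni, which infers the network itself with graph neural networks, and the node names are placeholders rather than real genes.

```python
from collections import Counter

# Hypothetical regulator -> target edges; names are placeholders, not real genes.
toy_edges = [
    ("TF_A", "gene_1"), ("TF_A", "gene_2"), ("TF_A", "TF_B"),
    ("TF_B", "gene_3"), ("TF_B", "gene_4"),
    ("TF_C", "gene_4"),
]


def rank_hub_regulators(edges: list[tuple[str, str]]) -> list[tuple[str, int]]:
    """Rank regulators by out-degree, i.e. their number of distinct targets."""
    # set() drops duplicate edges so each target is counted once per regulator.
    out_degree = Counter(regulator for regulator, _target in set(edges))
    return out_degree.most_common()


hub_ranking = rank_hub_regulators(toy_edges)
for regulator, target_count in hub_ranking:
    print(f"{regulator}: {target_count} targets")
```

Out-degree is only the crudest notion of a hub; comparing such rankings between conditions (for example healthy versus diseased tissue) is one way changes in network architecture can be surfaced.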

Insights and Actionable Outcomes: A Gateway to Designing Resilient Life Forms

What are some key considerations?

- A good balance between general purpose and task-specific tools will be critical to future models. To this end, inching closer to general self-supervised learning, one group recently developed data2vec, a framework that, instead of predicting modality-specific targets, predicts contextualized latent representations that incorporate information from the entire input (24). As DeepMind states, “[the] long-term aim is to solve intelligence, developing more general and capable problem-solving systems, known as artificial general intelligence (AGI).”

- Proteomics, and the “omics” more broadly, are reshaping the way we approach biomedicine. Deep learning (DL) techniques have already outperformed most other methods in terms of specificity, sensitivity, and efficiency (25). The choice of whether and how to use different DL approaches across the omics will be problem-specific, and will, as the FDA puts it, help us continue to “go beyond the reductionist approach”.

- The balanced incorporation of biological priors will be important. Encoding the right amount of prior knowledge will help models balance precision with accessibility to the broader community, notably in the form of interpretability, and therefore explainability (26).

What insights will we gain, and what applications can we expect?

More and more data is being generated and AI/ML tools developed. The creative combinatorial arrangement of data and methods in functional biology has opened the floodgates to our understanding and manipulation of organisms, from linear genetic sequences to three-dimensional, modular, hierarchically organized protein networks. At a time when radical conservation solutions are urgently needed, AI in proteomics and biology in general will guide us in designing the best possible building blocks of life, short-circuiting the long arc of evolution to create brilliantly engineered, strong, and resilient life forms.

Let us harness our newfound knowledge to protect old species, design new ones, and ensure long and resilient life for all. The future as such is ours. Hello, World.

References

1. Hartley H. Origin of the word “protein.” Nature. 1951.

2. Perez-Riverol Y, Bai M, Da Veiga Leprevost F, Squizzato S, Park YM, Haug K, et al. Discovering and linking public omics data sets using the Omics Discovery Index. Nature Biotechnology. 2017.

3. Smith LM, Kelleher NL. Proteoform: A single term describing protein complexity. Nature Methods. 2013.

4. Adkins JN, Varnum SM, Auberry KJ, Moore RJ, Angell NH, Smith RD, et al. Toward a human blood serum proteome: analysis by multidimensional separation coupled with mass spectrometry. Mol Cell Proteomics. 2002.

5. Jumper J, Evans R, Pritzel A, Green T, Figurnov M, Ronneberger O, et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021.

6. Tunyasuvunakool K, Adler J, Wu Z, Green T, Zielinski M, Žídek A, et al. Highly accurate protein structure prediction for the human proteome. Nature. 2021.

7. Callaway E. “It will change everything”: DeepMind’s AI makes gigantic leap in solving protein structures. Nature. 2020.

8. Bileschi ML, Belanger D, Bryant DH, Sanderson T, Carter B, Sculley D, et al. Using deep learning to annotate the protein universe. Nat Biotechnol. 2022.

9. Pallotta MT, Tascini G, Crispoldi R, Orabona C, Mondanelli G, Grohmann U, et al. Wolfram syndrome, a rare neurodegenerative disease: From pathogenesis to future treatment perspectives. Journal of Translational Medicine. 2019.

10. Heinzinger M, Elnaggar A, Wang Y, Dallago C, Nechaev D, Matthes F, et al. Modeling aspects of the language of life through transfer-learning protein sequences. BMC Bioinformatics. 2019.

11. Adolf-Bryfogle J, Teets FD, Bahl CD. Toward complete rational control over protein structure and function through computational design. Current Opinion in Structural Biology. 2021.

12. Defresne M, Barbe S, Schiex T. Protein design with deep learning. International Journal of Molecular Sciences. 2021.

13. Laine E, Eismann S, Elofsson A, Grudinin S. Protein sequence-to-structure learning: Is this the end(-to-end revolution)? Proteins: Structure, Function and Bioinformatics. 2021.

14. Madani A, Krause B, Greene ER, Subramanian S, Mohr BP, Holton JM, et al. Deep neural language modeling enables functional protein generation across families. bioRxiv. 2021.

15. Hahn MW, Wray GA. The g-value paradox. Evolution and Development. 2002.

16. Xue Y, Liu Z, Fang X, Wang F. Multimodal Pre-Training Model for Sequence-based Prediction of Protein-Protein Interaction. 2021 Dec 9.

17. Li H, Sun Y, Hong H, Huang X, Tao H, Huang Q, et al. Inferring transcription factor regulatory networks from single-cell ATAC-seq data based on graph neural networks. Nat Mach Intell. 2022 Apr 11;4(4):389–400.

18. Kim GB, Gao Y, Palsson BO, Lee SY. DeepTFactor: A deep learning-based tool for the prediction of transcription factors. Proc Natl Acad Sci U S A. 2021 Jan 12;118(2).

19. Chen C, Hou J, Shi X, Yang H, Birchler JA, Cheng J. DeepGRN: prediction of transcription factor binding site across cell-types using attention-based deep neural networks. BMC Bioinformatics. 2021.

20. Zheng A, Lamkin M, Zhao H, Wu C, Su H, Gymrek M. Deep neural networks identify sequence context features predictive of transcription factor binding. Nat Mach Intell. 2021.

21. Gupta C, Ramegowda V, Basu S, Pereira A. Using Network-Based Machine Learning to Predict Transcription Factors Involved in Drought Resistance. Front Genet. 2021.

22. Chen L, Pan XY, Guo W, Gan Z, Zhang YH, Niu Z, et al. Investigating the gene expression profiles of cells in seven embryonic stages with machine learning algorithms. Genomics. 2020.

23. Xia B, Zhao D, Wang G, Zhang M, Lv J, Tomoiaga AS, et al. Machine learning uncovers cell identity regulator by histone code. Nat Commun. 2020.

24. Baevski A, Hsu W-N, Xu Q, Babu A, Gu J, Auli M. data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language. 2022 Feb 7.

25. Min S, Lee B, Yoon S. Deep learning in bioinformatics. Briefings in Bioinformatics. 2017.

26. Bepler T, Berger B. Learning the protein language: Evolution, structure, and function. Cell Syst. 2021.